Today STREAM uploaded its first manuscript to medRxiv, a preprint server.

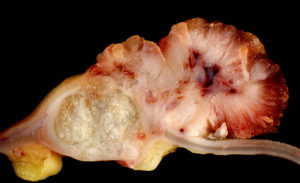

The manuscript, which can be accessed here, traces the clinical development process of a very uninteresting cancer drug you have never heard of, ixabepilone (approved in the USA, not in Europe). Our goal was to estimate the total patient benefit and burden associated with unlocking the therapeutic activity of this drug.

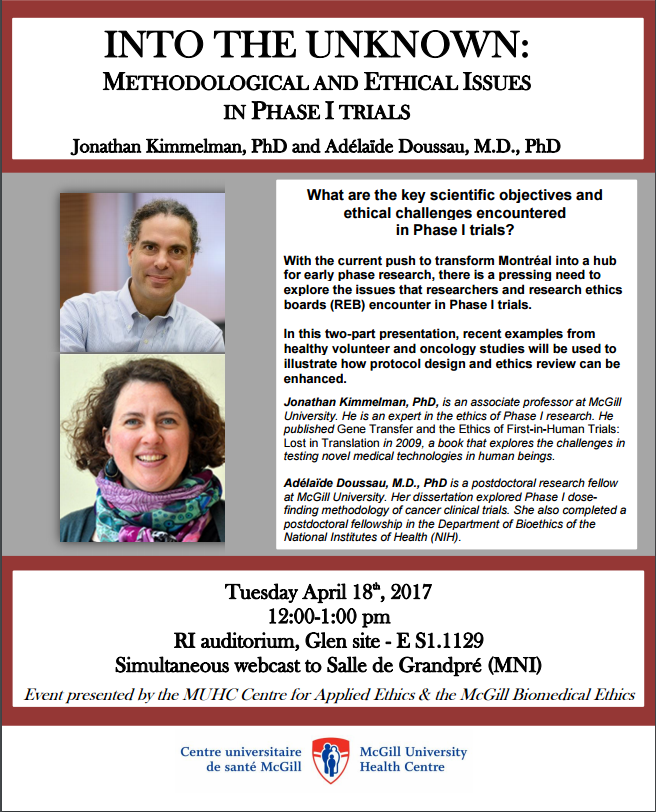

In research, the impulse is to study interesting things – in the case of drugs, cool, big-impact drugs like sunitinib, pembrolizumab, and imatinib. We do that all the time in STREAM. Why study uninteresting drugs? Well, because interesting things are, by definition, exceptional. That means a lot of patients participate in, and resources are expended on, research involving uninteresting drugs (how many? Stay tuned for a forthcoming assessment from STREAM). As an aside, arguably we bioethicists and – at least historically – science and technology studies types probably spend too much time thinking about exceptional technologies (e.g. CRISPR/Cas9) and not enough on the mundane (e.g. asthma inhalers).[1]

What did we discover in our study of ixabepilone?

First, as with the interesting drugs sunitinib and sorafenib, members of yesterday’s wunderkind class of tyrosine kinase inhibitors, drug developers unlocked the clinical utility of ixabepilone with incredible efficiency. That is, the first indication they put into testing was the first indication to get an FDA approval. So much for dismissing preclinical and early phase research as hopelessly biased and misleading.

Second, as with sunitinib and sorafenib, drug developers spent a lot of energy trying to extend ixabepilone to other indications. Not as much as with sunitinib and sorafenib, but still lots (17 different indications). Post-approval trials – which typically try to access fruit higher on the tree – were mostly a bust, leading to lots of harm but no new FDA approvals.

Third, we found that summed across the whole drug development program, 16% of patients experienced an objective response (i.e. tumour shrinkage, a quick and dirty way of assessing benefit); 2.2% experienced drug-related fatalities. This compares with 16% and 1% of patients participating in sunitinib trials, and 12% and 2.2% for sorafenib. So to be clear: overall, the risk and burden associated with developing a barely useful drug, ixabepilone, are pretty much the same as those for developing the breakthrough drugs sunitinib and sorafenib.

Finally, about a quarter of trials in our sample were deemed uninformative using prespecified criteria. That compares with the figure of 26% in our study of sorafenib.

There are lots of normative and policy implications to unpack here. We leave that for later work.

For now, we close with a few words about medRxiv. Publishing here is STREAM’s way of overcoming the obstacles to getting our novel work published in a peer-reviewed journal. We completed this manuscript in 2015 and submitted it to six different venues. Several were high-impact venues, and so desk rejection was not unexpected. Two journals were pretty low-impact, specialty cancer venues. In one case, the desk rejection took three months, and only then did we learn that editors were “unable to find referees,” despite our several attempts to suggest different referees. (?!) Our worst experience was at J Clin Epi. We submitted the manuscript in September 2015 and received a notice of rejection – after multiple queries as to its status – in March 2016 (6 months later). There, the paper was favourably received: no objections about the methodology or study quality. But the editor felt the piece duplicated our work on sunitinib (a bit like saying that a randomized trial of a drug in lung cancer is duplicative of a randomized trial of a totally unrelated drug in lung cancer), and also objected that our conclusions were based on only one drug (well, yeah: our abstract explains we set out to study one drug. Kind of like a meta-analysis, now that I think about it. If that was a concern, a desk rejection would have saved us and the two referees a lot of headache).

Thereafter, this important article sat on our hard drive, until my PhD student (now graduated) proposed depositing it on medRxiv. STREAM has many other manuscripts sitting on our hard drives because of serial rejection arising from editorial discretion, and not due (if we may say so ourselves) to the quality of our work. This is a waste of research efforts and resources, a drain on morale, and it constitutes a threat to the validity of the scientific literature by contributing to a certain kind of publication bias. In the coming years, we will be aiming to upload our serially rejected work on preprint archives in order to ensure that important, but unpopular research papers are available to the scientific community.

References

1. Timmermans, S. and Berg, M. (2003), The practice of medical technology. Sociology of Health & Illness, 25: 97-114. doi:10.1111/1467-9566.00342

BibTeX

@Manual{stream2019-1786,
  title = {STREAM goes Preprint with an Analysis of the Development of the Anti-cancer drug Ixabepilone},
  journal = {STREAM research},
  author = {Amanda MacPherson},
  address = {Montreal, Canada},
  date = 2019,
  month = aug,
  day = 1,
  url = {http://www.translationalethics.com/2019/08/01/stream-goes-preprint-with-an-analysis-of-the-development-of-the-anti-cancer-drug-ixabepilone/}
}

MLA

Amanda MacPherson. "STREAM goes Preprint with an Analysis of the Development of the Anti-cancer drug Ixabepilone" Web blog post. STREAM research. 01 Aug 2019. Web. 22 Oct 2024. <http://www.translationalethics.com/2019/08/01/stream-goes-preprint-with-an-analysis-of-the-development-of-the-anti-cancer-drug-ixabepilone/>

APA

Amanda MacPherson. (2019, Aug 01). STREAM goes Preprint with an Analysis of the Development of the Anti-cancer drug Ixabepilone [Web log post]. Retrieved from http://www.translationalethics.com/2019/08/01/stream-goes-preprint-with-an-analysis-of-the-development-of-the-anti-cancer-drug-ixabepilone/